We can't find the internet

Attempting to reconnect

Something went wrong!

Hang in there while we get back on track

Processing PDFs in the Browser: An Elixir, Rust, and WASM Adventure Part 1

Table of contents:

Intro

A while ago, a great article came out that I caught on the Elixir News newsletter Parsing PDFs in Elixir using Rust

The main gist of it was using NIFs with Elixir to use Rust to extract details out of PDFs and it got me thinking, wouldn’t it be great if we could go one step further, and use straight WASM to process the PDFs on the clientside?

This turned into a much deeper rabbit hole than I expected.

The Beginning

To get started, I wanted to see if I could replicate the setup as closely as possible, with the only difference being having the extraction done on the browser-side rather than the server.

Simple enough, right? Well, yes and no. Here are our tasks:

- Setup up a Liveview file uploader

- Setup a WASM pipeline

- Setup the JS hooks to create a bi-directional flow from liveview to our WASM/Rust

- Setup up the Rust PDF extraction (using extractous, like in the article)

The first task is easy enough. The only difference between my setup and the article was the message passing with the JS hooks to communicate with the WASM binary.

The second one is a bit more tricky, but luckily, I spend a lot of time already in the WASM world and have a pipeline in place I can re-use.

The third task is also fairly straightforward, and luckily I have spent enough time working with getting Typescript setup in phoenix, so this was also a case of re-use.

The last and finaly task, turns out to be the most tricky of them all.

The Hurdle

The original plan was to figure out a minimal port of extractous that would be WASM compatible. Sadly, not possible. The Extractous package uses Apache Tika to be able to handle whatever file type you send at it, and this unfortunately, is not a WASM compatible approach.

We do have alternative options, such as Pandoc with Haskell, which I love, but for the purposes of this experiment, I would like to keep everything in the Rust ecosystem (additionally, there are some pandoc wasm implementations out there, but they do not seem as focused on the extraction of pdfs as they are other formats).

Luckily, there is a project called Shiva that gets us most of the way there.

For now, we are going to build out our own pdf wasm extractor (called extractous_wasm with the eventual intent of porting extractous to wasm). This will allow us to test the core principle of the discussion, which is working with all of the above technologies and outputting a successful extraction of a simple PDF document.

The Setup

You will want to start with a new phoenix project. Then, we want to get a WASM/Rust pipeline setup. I have another blog post specifically related to this topic, that can help you get started with that.

We will continue the rest of this assuming you have a rust package called extractous_wasm in your vendor/ folder.

Rust Setup

Inside of your vendor/extractous_wasm/cargo.toml file, we need to make some updates:

Additionally, we need a global config for our wasm pipeline. Create a .cargo/config.toml file and add the following:

Phoenix Setup

Our phoenix setup will be simple, with a Liveview to upload a file and a hook to handle the file upload and send it to our WASM binary.

You will want to make sure to set the router to let you actually see the page. The Liveview looks as such:

Build

If you followed the instructions in the other blog post, you should be able to run

mix phx_server_with_rust

to have both the phx server running, as well as your rust code being compiled and watched for changes.

Results

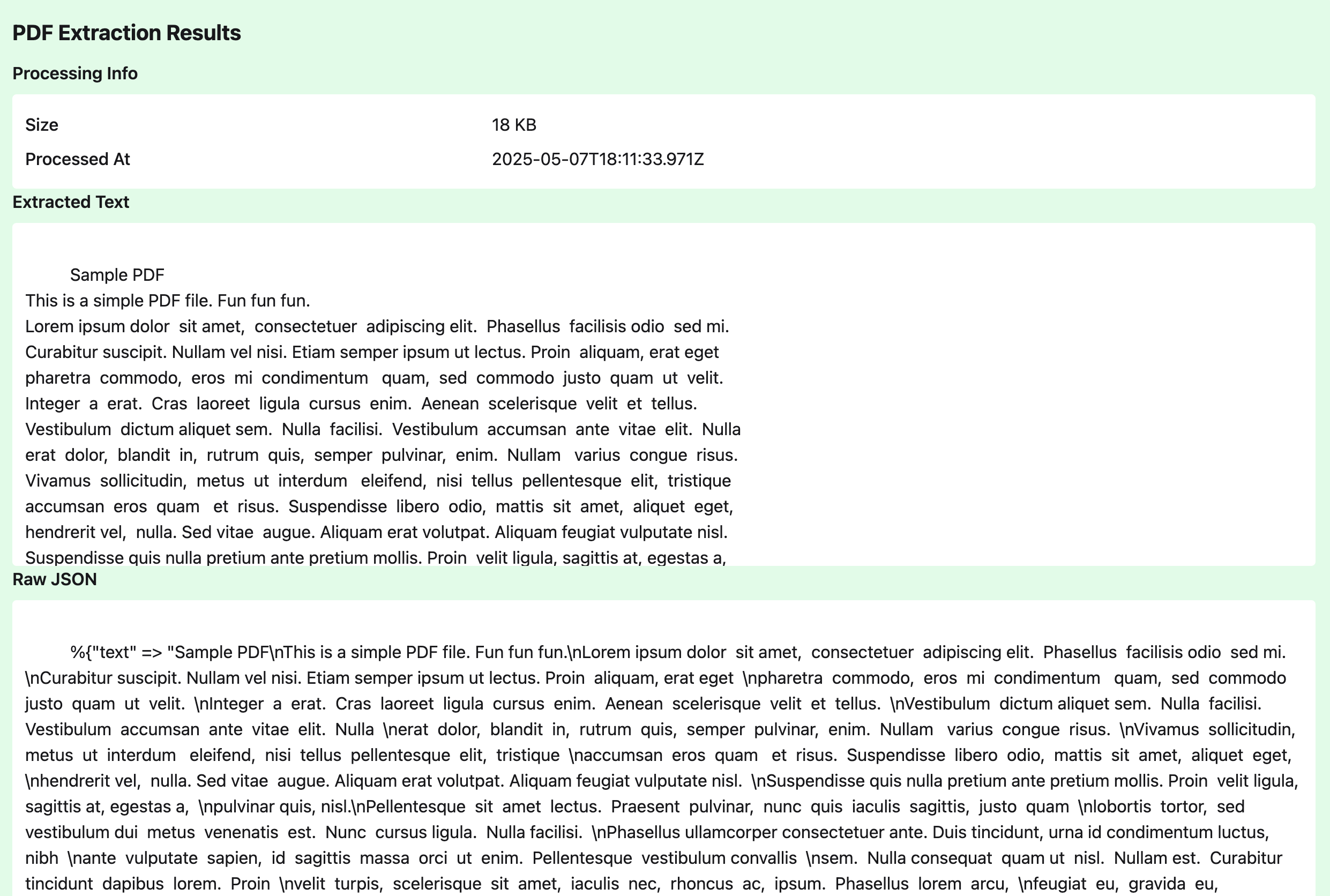

We are using a sample PDF document to test our implementation.

You can download the sample PDF document we are using for testing here.

If everything goes according to plan, you should see the following:

Next Steps

Shiva is a great substitute for handling a wasm based approach, but there are still many deficiencies to be expected with a newer package, compared to Tika (Such as image size handling, which extractous has solutions for, and rendering issues with certain ‘fancy’ pdf types, which manifests with an Identity-H error).

However, we have proven out the basics of our web based PDF parser, the next piece will be to expand the compatibility and functionality,

- Integrate extractous with our wasm pipeline

- Introduce OCR with tesseract-wasm

The next steps will be a discussion for the next blog post on this topic.